[AINews] Welcome Interconnects and OpenRouter • Buttondown

Chapters

High Level Discord Summaries

Discord Summary

DiscoResearch Discord

Model Merging

TheBloke Coding Messages

Nous Research AI Project Obsidian

LlamaIndex - General Discussion

Research and Discussions in LAION Channel

OpenRouter (Alex Atallah) ▷ #general (152 messages🔥🔥)

CUDA Mode - Torch

CUDA MODE ▷ #algorithms

LLM Plugin and Improvements in Streaming, Packaging Tutorial, Chat UI, and GPU Choice

LLM Perf Enthusiasts AI Discussion Highlights

High Level Discord Summaries

- TheBloke Discord Summary: Engineers are troubleshooting a UI bug in Polymind and discussing model comparisons and optimizations, including Mistral AI's models and GGUF-quantized models. LangChain's context reordering feature and Mistral AI's open-source models are also in focus.

- LM Studio Discord Summary: Discussions cover the announcement of a new Spanish LLM, hardware experiments with RTX 2060 setups, fixes for WSL networking issues, and Piper, a neural text-to-speech system. Users are also discussing quantization parameters for optimizing LLM performance.

Discord Summary

EBDI Agent Challenges and Solutions: @.braydie explored EBDI frameworks for agent goal determination, but encountered thinking loops after integrating the ReAct framework. They examined decision-making models from a JASSS paper to address the issue.

Mistral Steps Up to Rival GPT-4: A TechCrunch article reported that Mistral Large, a new model from Mistral AI, is positioned to compete with OpenAI's GPT-4, offering cost-effectiveness and uncensored content, and is now available on Azure.

Prompt Protection Paradox: Users deliberated on how to protect intellectual property in prompts, concluding that while copyright might cover exact wording, the replication of ideas via linguistic variation is likely unstoppable.

Text Classification Tactics: @crifat kicked off a discussion on text classification methods, opting to start with the base model and Assistant, bypassing fine-tuning, to sort texts into categories such as "Factual" and "Misleading."
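A minimal sketch of this zero-shot setup, assuming the model is simply asked to answer with one label: build a fixed-label prompt, then map the free-text reply back onto the allowed categories. The `build_prompt` and `parse_label` helpers, the prompt wording, and the "Unknown" fallback are hypothetical; only the "Factual" and "Misleading" labels come from the discussion, and no actual model or Assistants API call is shown.

```python
LABELS = ("Factual", "Misleading")  # categories named in the discussion

def build_prompt(text, labels=LABELS):
    """Compose a zero-shot classification prompt (hypothetical wording)."""
    options = ", ".join(labels)
    return (
        f"Classify the following text as one of: {options}.\n"
        "Answer with the label only.\n\n"
        f"Text: {text}\nLabel:"
    )

def parse_label(reply, labels=LABELS, fallback="Unknown"):
    """Map a free-text model reply onto one allowed label, case-insensitively."""
    cleaned = reply.strip().strip(".").lower()
    # Prefer an exact match on the whole reply.
    for label in labels:
        if cleaned == label.lower():
            return label
    # Otherwise take the first label mentioned anywhere in the reply.
    for label in labels:
        if label.lower() in cleaned:
            return label
    return fallback

print(build_prompt("The moon is made of cheese."))
print(parse_label(" factual. "))
```

Exact matches are tried first; the substring fallback is deliberately crude (a reply such as "not misleading" would still map to Misleading), so a real pipeline would need stricter output constraints.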

Meta-Prompting Generates Buzz and Security Concerns: Meta-prompting was a hot topic, with claims that advanced techniques could generate extensive documentation, but it also raised security flags when a user shared a PDF, which led to action against that user's account.

DiscoResearch Discord

An academic paper critiquing current evaluation methods for Large Language Models was shared, sparking debate about mismatched capabilities. Hidden null strings were discovered during dataset conversion, showing how complex data preparation can be. Discussions of Retrieval-Augmented Generation (RAG) bots for codebases covered combining technologies such as Git loaders and OpenAI embeddings to build developer-assistant tools. Joint end-to-end optimization of RAG and LLMs using gradients was explored, with reference to the LESS paper on better selection of training examples. EQ-Bench gained German language support, prompting discussion of language fluency and the nuances of model benchmarking.
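The retrieval step of a codebase RAG bot can be sketched as ranking pre-computed chunk embeddings by cosine similarity to the query embedding. This pure-Python sketch assumes the embeddings already exist (for example from an embeddings API); the toy 3-dimensional vectors and chunk names are made up, and no Git loader or OpenAI call is shown.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, chunks, k=2):
    """Return the ids of the k chunks most similar to the query.

    `chunks` maps a chunk id (here, a file path) to its embedding.
    """
    ranked = sorted(chunks.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [chunk_id for chunk_id, _ in ranked[:k]]

# Hypothetical embeddings for three code chunks.
chunks = {
    "utils/io.py": [0.9, 0.1, 0.0],
    "models/train.py": [0.1, 0.9, 0.2],
    "README.md": [0.4, 0.4, 0.4],
}
print(top_k([1.0, 0.0, 0.0], chunks, k=2))  # ['utils/io.py', 'README.md']
```

The retrieved chunks would then be pasted into the LLM prompt as context for answering the developer's question.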

Model Merging

User @jsarnecki reported garbled output, such as "\)\.", when merging Orca-2-13b with WhiteRabbitNeo-13b using the SLERP merge method, and included the MergeKit-produced readme with details of the problematic merge.

Merging Details Revealed in Readme: The readme provided by @jsarnecki states that mergekit was used to create Orca-2-Neo-13b, a merge of Orca-2-13b and WhiteRabbitNeo-Trinity-13B using SLERP over 40 layers of each model.
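For readers unfamiliar with the method, SLERP interpolates along the arc between two weight vectors rather than along the straight line between them. The pure-Python sketch below shows the formula for a single pair of flattened tensors; it is an illustration under that simplification, not mergekit's implementation, which operates on real tensors and can vary the interpolation factor across layers.

```python
import math

def slerp(t, v0, v1, eps=1e-8):
    """Spherically interpolate between two flattened weight tensors.

    t=0 returns v0 and t=1 returns v1. The angle is measured between the
    normalized vectors; near-parallel or zero inputs fall back to plain
    linear interpolation, where SLERP is numerically ill-conditioned.
    """
    n0 = math.sqrt(sum(a * a for a in v0))
    n1 = math.sqrt(sum(b * b for b in v1))
    lerp = [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    if n0 < eps or n1 < eps:
        return lerp
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (n0 * n1)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # clamp rounding error before acos
    if abs(cos_theta) > 1 - eps:
        return lerp
    theta = math.acos(cos_theta)
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]

# Halfway between two orthogonal unit vectors stays on the unit circle.
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

The linear fallback matters because the weights $\sin((1-t)\theta)/\sin\theta$ and $\sin(t\theta)/\sin\theta$ blow up as $\theta \to 0$.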

TheBloke Coding Messages

- AI's Future in Decompilation: @mrjackspade expressed excitement about the prospects of AI-assisted decompilation, looking forward to the day when manual reconstruction of obfuscated decompiled code won't be necessary.

- The Struggle with Obfuscated Code: @mrjackspade voiced frustration over reconstructing obfuscated decompiled code by hand and hinted at the potential ease of generating datasets for AI training from open-source projects.

- Invitation to Test Summarization Script: @wolfsauge shared an update to their summarize script and is seeking someone to test it with a large model, mentioning it works well with Mistral 7b instruct v0.2 at fp16 in vLLM. The script is available on GitHub.

Nous Research AI Project Obsidian

A user expressed gratitude and acknowledgment within the project group. Discussions covered hyperparameters for high performance, analysis of Mistral model behavior, and using Self-Extend with Nous's fine-tune of SOLAR 10.7B. Users also discussed the magic of quantization, demand for Self-Extend configurations, and interest in Mistral Large's capabilities. Links were shared to the Nous-Hermes-2-SOLAR-10.7B-GGUF model.
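To make the "magic of quantization" a little more concrete, the sketch below performs symmetric block-wise absmax quantization to 4-bit signed integers, the general idea behind block formats such as those used in GGUF files. It is not GGUF's actual layout (real formats pack bits and differ per quant type), and the block size of 4 is an arbitrary assumption chosen for readability.

```python
def quantize_blocks(weights, block_size=4, bits=4):
    """Symmetric absmax quantization with one float scale per block."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed integers
    blocks = []
    for i in range(0, len(weights), block_size):
        block = weights[i:i + block_size]
        # Scale maps the largest magnitude onto qmax; an all-zero block gets scale 1.0.
        scale = max(abs(w) for w in block) / qmax or 1.0
        q = [max(-qmax, min(qmax, round(w / scale))) for w in block]
        blocks.append((scale, q))
    return blocks

def dequantize_blocks(blocks):
    """Recover approximate weights as scale * integer code."""
    return [scale * q for scale, qs in blocks for q in qs]

w = [0.1, -0.5, 0.25, 0.7, 0.0, 0.0, 0.0, 0.0]
print(dequantize_blocks(quantize_blocks(w)))
```

Each weight is stored as a small integer plus a shared per-block scale, so the reconstruction error per weight is at most half that block's scale.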

LlamaIndex - General Discussion

Open-Source Text Generation with Llama2:

- Working on a project with CSV and PDF inputs using Llama2 model.

Building an OSS SDK Assistant with RAG:

- Inquiry about building a RAG system to assist users of an OSS SDK.

Exploring Encoder-Based Diffusion for Stable Diffusion:

- Discussion of an encoder-based method for Stable Diffusion.

The Dilemma of DARPA Funded Projects and AI:

- Satirical debate on DARPA-supported AI research.

Debating on Quantum Computing's Impact on AI:

- Conversation on quantum computing's potential for processing transformer AI models.

Content Moderation Challenges in AI:

- Deep dive into content moderation issues, including how to address CSAM.

Discussing Open Source vs. Proprietary AI Models:

- Comparison of open-source models like Mistral Large with proprietary models.

Research and Discussions in LAION Channel

LAION ▷ research (15 messages🔥):

- Radioactivity in Language Models: @thejonasbrothers shared a research paper on detecting whether a language model was trained on watermarked synthetic instructions. The study found that such training can be detected with high confidence even when as little as 5% of the training text is watermarked.

- DeepMind's Genie and Humanoid Robotics: @vrus0188 discussed Google DeepMind's advancements in humanoid robotics.

- Finite Element Analysis Learning: @wyndyl inquired about learning with Finite Element Analysis, with @itali4no sharing a relevant paper.

- Challenge with Inverse Discrete Fourier Transform: @mkaic described problems with the inverse Fourier transform in neural network synthesis.

- Educational Content Alert: @chad_in_the_house shared an EleutherAI update, but the link was broken.
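The paper builds on LLM text watermarking. As background only, here is a sketch of the basic detection statistic for a Kirchenbauer-style "green list" watermark: a generator nudges each token toward a pseudo-random green subset of the vocabulary seeded by the previous token, and a detector counts green tokens and runs a one-proportion z-test. The paper's radioactivity test is more involved (it scores text from a model suspected of having been trained on watermarked data) and is not reproduced here; the SHA-256 green list, `GAMMA = 0.25`, and all helper names are assumptions.

```python
import hashlib
import math

GAMMA = 0.25  # assumed fraction of the vocabulary placed on the "green list"

def is_green(prev_token, token, gamma=GAMMA):
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(tokens, gamma=GAMMA):
    """One-proportion z-test: how far the green-token count exceeds chance."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    n = len(pairs)
    green = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    return (green - gamma * n) / math.sqrt(n * gamma * (1 - gamma))

# A toy "watermarked generator" that always prefers a green next token.
vocab = [f"w{i}" for i in range(50)]
tokens = ["w0"]
for _ in range(100):
    prev = tokens[-1]
    tokens.append(next((w for w in vocab if is_green(prev, w)), vocab[0]))
print(round(watermark_z_score(tokens), 1))
```

Unwatermarked text should give z-scores near zero, while the toy watermarked sequence lands far above common detection thresholds such as z = 4.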

LAION ▷ learning-ml (1 message):

- Exploring Transformer Learning Capabilities: @phryq proposed experiments on whether transformers can learn relations between object sizes, but the topic drew no further discussion.

Summary Links:

- Watermarking Makes Language Models Radioactive: Investigates detectability of training data in language models.

- The AI 'Genie' is Out + Humanoid Robotics Step Closer: Discusses Google DeepMind's new advancements in humanoid robotics.

- abacus/src/interpolators.py: Investigates activation interpolation for sparse neural networks.

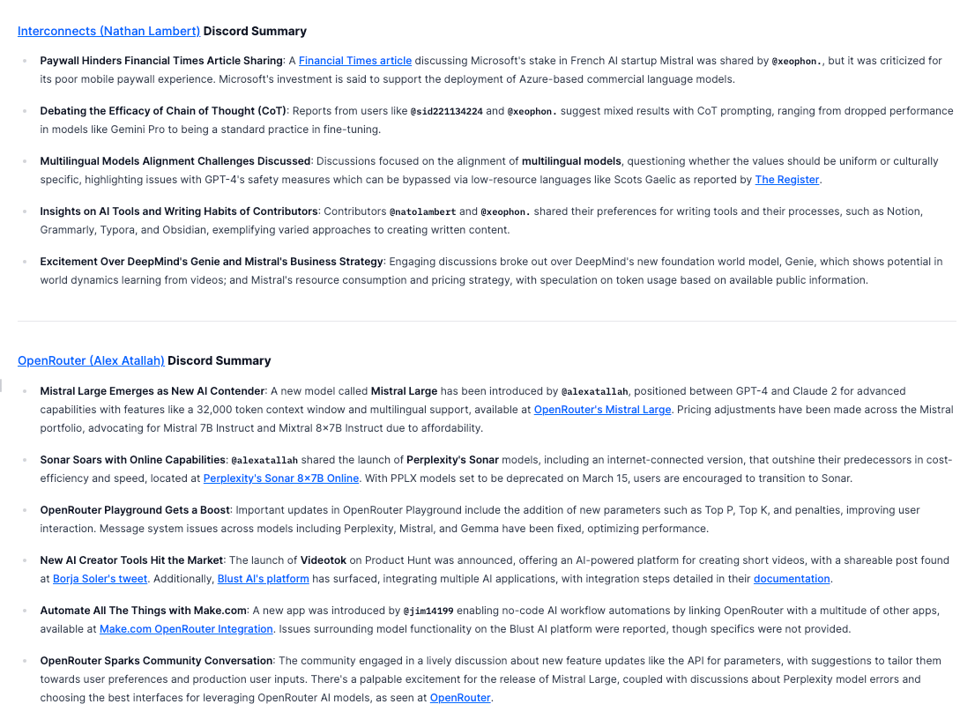

Interconnects (Nathan Lambert) ▷ ideas-and-feedback (3 messages):

- Acknowledgment and understanding of previous discussions.

Interconnects (Nathan Lambert) ▷ news (85 messages🔥🔥):

- Financial Times Article Locked Behind Paywall: @xeophon shared a paywalled Financial Times article.

- Microsoft's Stake in Mistral: @philpax described Microsoft's investment in Mistral.

- Mistral Announces New Optimized Model: @xeophon highlighted Mistral Large, a top-tier reasoning model.

- Closed or Open - The Survival Dilemma of AI Companies: Discussion on Mistral's equity strategy.

- Reactions to Le Chat and Mistral's Strategies: Discussion on Mistral's pricing strategy and sustainability of AI startups.

Summary Links:

- Bringing open AI models to the frontier: Insights on Mistral AI's initiatives.

Interconnects (Nathan Lambert) ▷ other-papers (14 messages🔥):

- Discussion on the performance of Gemini Pro with Chain of Thought.

Interconnects (Nathan Lambert) ▷ ml-questions (21 messages🔥):

- Discussions on alignment in multilingual models.

Interconnects (Nathan Lambert) ▷ random (54 messages🔥):

- Discussions on DeepMind's Genie, CoT effectiveness, Mistral's resource consumption, and models for language selection.

Summary Links:

- Various tweets and papers related to discussions.

OpenRouter (Alex Atallah) ▷ #general (152 messages🔥🔥)

Enthusiastic Feedback for New Features:

User @wikipediadotnet expressed excitement about the new parameter feature and suggested improvements like an API for parameters and consideration of how model default settings affect user preferences.

Community Brainstorms Feature Enhancements:

@cupidbot.ai requested a 'use_default_params' feature that would apply median optimal parameters once a model has been live for a certain period. They also recommended weighting input from users with high paid volume more heavily, since they are likely production users.
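The proposed default could be sketched as a weighted median of the values users actually set for a parameter, with each user weighted by paid volume. The helper name, the data shape, and the choice of a weighted median over other aggregates are illustrative assumptions, not OpenRouter's implementation or API.

```python
def weighted_median(values_and_weights):
    """Return the smallest value at which cumulative weight reaches half the total."""
    items = sorted(values_and_weights)
    total = sum(w for _, w in items)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    running = 0.0
    for value, weight in items:
        running += weight
        if running >= total / 2:
            return value
    return items[-1][0]

# Hypothetical (temperature, paid volume in USD) pairs observed for one model.
observed = [(0.2, 500.0), (0.7, 50.0), (0.8, 40.0), (1.0, 10.0)]
print(weighted_median(observed))  # 0.2
```

Here the one high-volume user pulls the default to 0.2, whereas an unweighted median of the four values would be 0.75, which shows how volume weighting shifts defaults toward production users.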

Anticipation Builds Up for Mistral Large:

Multiple users like @billbear and @louisgv discussed the anticipation and eventual release of Mistral Large on OpenRouter, highlighting @alexatallah's engagement in the rollout and user feedback on server errors post-launch.

Perplexity Alternation Puzzles Users:

Users @mostlystable and @lynxplayz hit error 400 with Perplexity models on OpenRouter; @louisgv investigated and addressed the issue to improve compatibility with existing workflows.

Users Seek the Ideal Interface:

User @jamesm6228 asked for the best UI, similar to Typing Mind, for using all OpenRouter AI models, and @louisgv followed up to understand their feature needs and suggest alternatives.

CUDA Mode - Torch

Users discussed a range of PyTorch and CUDA topics. @briggers shared insights on reducing compile times with cpp_extension.load_inline. The history of PyTorch, its relationship to Torch, and its origins were also explored. Other discussions covered guidance on integrating custom Triton kernels with torch.compile, and compiler limitations in generating Fast Approximate (FA) optimizations while maintaining numerical stability. The community warmly welcomed significant contributors such as Andreas Köpf.

CUDA MODE ▷ #algorithms

Insight into Improved Softmax Performance:

- @marksaroufim shared a link to a paper (Efficient Softmax Approximation on GPUs) that explains the softmax trick in FlashAttention, highlighting the local correction factor $e^{m_{j-1} - m_j}$ (where $m_j$ is the running maximum after step $j$) that rescales partial results so the final output still equals the global softmax.
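The correction factor is easiest to see in a streaming (online) softmax. The plain-Python sketch below processes scores one at a time; it illustrates the rescaling only and is not the FlashAttention kernel, which applies the same idea per block of attention scores on the GPU.

```python
import math

def online_softmax(scores):
    """Softmax whose normalizer is accumulated in one streaming pass.

    m is the running maximum and d the running sum of exp(x - m). When a new
    element raises the maximum from m_prev to m_new, the old sum is rescaled
    by exp(m_prev - m_new), i.e. the correction factor e^{m_{j-1} - m_j}.
    """
    m, d = -math.inf, 0.0
    for x in scores:
        m_new = max(m, x)
        d = d * math.exp(m - m_new) + math.exp(x - m_new)
        m = m_new
    # A second pass normalizes; FlashAttention instead also rescales its
    # running output accumulator, so it never revisits the scores.
    return [math.exp(x - m) / d for x in scores]

print(online_softmax([1.0, 3.0, 2.0, -1.0]))
```

On the first element the old maximum is $-\infty$, so the factor is $e^{-\infty} = 0$ and the empty running sum is simply discarded.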

Exploring the Base2 Trick:

- @andreaskoepf pointed out a GitHub link (softmax_base2_trick.ipynb) explaining the base2 trick for normal softmax, which is also applicable to incremental softmax as implemented in OpenAI's Triton example (06-fused-attention.py).
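The base2 trick rests on the identity $e^{x} = 2^{x \log_2 e}$: GPUs have a fast base-2 exponential, and the constant $\log_2 e \approx 1.4427$ can be folded into a scale factor that is applied anyway (such as an attention softmax scale). A minimal sketch of a softmax computed this way; the `scale` parameter and function name are illustrative, and this is not the notebook's or the Triton example's code.

```python
import math

LOG2_E = math.log2(math.e)  # about 1.4427; converts natural-exp arguments to base 2

def softmax_base2(scores, scale=1.0):
    """Softmax of scale * scores computed with 2**x instead of e**x.

    exp(scale * (x - m)) == 2 ** ((x - m) * scale * LOG2_E), so log2(e)
    is folded into the existing scale once, up front.
    """
    s2 = scale * LOG2_E
    m = max(scores)  # subtract the max for numerical stability
    exps = [2.0 ** ((x - m) * s2) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(softmax_base2([0.3, 2.0, -1.5], scale=0.5))
```

Because subtracting the running maximum commutes with the change of base, the same folding carries over unchanged to the incremental (online) softmax.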

Backward Pass Automation in Triton:

- @marksaroufim mentioned the desire for automatic generation of backward passes from a given forward pass in Triton.

Minor Performance Gains through Compiler Optimization:

- Discussion of how far compilers handle such optimizations automatically, and of awareness of the tricks used in the Triton example.

Quake3 Algorithm Recalled in Discussion:

- @iron_bound recalled the fast inverse square root trick from Quake3 as a historical example of clever optimization in computational algorithms.
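For reference, the trick reinterprets a 32-bit float's bits as an integer, subtracts half of that integer from the magic constant 0x5F3759DF to get a first guess at 1/sqrt(x), then refines the guess with one Newton-Raphson step. Python has no pointer casts, so this sketch uses the `struct` module to reinterpret the bits; it is a teaching illustration, not a performance optimization in Python.

```python
import struct

def fast_inv_sqrt(x: float) -> float:
    """Quake3-style approximation of 1/sqrt(x) for positive 32-bit floats."""
    i = struct.unpack("<I", struct.pack("<f", x))[0]  # float bits as uint32
    i = 0x5F3759DF - (i >> 1)                         # magic-constant initial guess
    y = struct.unpack("<f", struct.pack("<I", i))[0]  # bits back to float
    return y * (1.5 - 0.5 * x * y * y)                # one Newton-Raphson step

print(fast_inv_sqrt(4.0))  # close to 0.5
```

The Newton step is what brings the relative error down to roughly 0.2% or better.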

LLM Plugin and Improvements in Streaming, Packaging Tutorial, Chat UI, and GPU Choice

- LLM Plugin Brings Groqcloud to Python Devs: @angerman. shared that the LLM plugin for accessing Groqcloud models is now available, with instructions for installing it and obtaining an API key. The plugin supports models such as groq-llama2 and groq-mixtral, and an example shows it generating pet names.

- Python Packaging Tutorial for the Uninitiated: @0xgrrr pointed @angerman. to a tutorial on packaging a Python project and uploading it to PyPI, reassuring them that the process is straightforward.

- LLM Plugin Improves with Streaming Support: An update from @angerman. mentioned new streaming functionality for the LLM plugin, expressing gratitude to @746595581086138409 for their contribution to this enhancement.

- Datasette Developer Hints at Upcoming Chat UI: @simonw revealed that a chat UI for LLM is in the works, although it's still a work-in-progress with no set completion date.

- Datasette's Choice: Why Fly GPUs?: @simonw explained to @kiloton9999 that Fly GPUs were chosen partly because Fly sponsors Datasette development and partly because their GPUs can scale to zero.

LLM Perf Enthusiasts AI Discussion Highlights

- FireFunction V1 ignites the scene: @sourya4 discussed FireFunction V1, a new model offering GPT-4-level structured output and decision routing.

- Exploring the Best in Function Calling: @yikesawjeez lists Gorilla OpenFunctions, NexusRaven, and Litellm Function Calling Wrapper.

- R2R Sets New Industry Benchmark: @emrgnt_cmplxty announces the launch of R2R, a framework for rapid development of production-ready RAG systems.

- GPT-4 Tackles Drug Information: User @thebaghdaddy successfully utilizes GPT-4 to generate informative cards on drug information.

- The Pain of Latency: @res6969 raised concerns about OpenAI API latency and is exploring hosting options as a solution.

- Gemma Enhanced with Turn Tokens: @imonenext integrates turn tokens into the Gemma model for further instruction/RL fine-tuning.
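For context, Gemma's instruction-tuned chat format marks turns with `<start_of_turn>` and `<end_of_turn>` tokens. A minimal formatting sketch, assuming those marker strings and the "user"/"model" role names; the post does not say exactly which tokens or template @imonenext used, and BOS-token handling is omitted here.

```python
def format_gemma_chat(messages, add_generation_prompt=True):
    """Render (role, text) pairs with Gemma-style turn markers.

    Roles must be "user" or "model"; an optional trailing open model turn
    cues the model to generate its reply.
    """
    parts = []
    for role, text in messages:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    if add_generation_prompt:
        parts.append("<start_of_turn>model\n")
    return "".join(parts)

print(format_gemma_chat([("user", "Hi"), ("model", "Hello!"), ("user", "Name a color.")]))
```

Explicit turn boundaries like these give later instruction or RL fine-tuning an unambiguous place to separate prompt tokens from response tokens.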

FAQ

Q: What models and optimizations are being discussed in TheBloke Discord Summary?

A: Engineers are troubleshooting a UI bug in Polymind and discussing model comparisons and optimizations, including Mistral AI's models and GGUF-quantized models.

Q: What is Mistral Large and how does it compare to GPT-4 according to the TechCrunch article?

A: Mistral Large is a new model from Mistral AI positioned to compete with OpenAI's GPT-4, offering cost-effectiveness and uncensored content, and is now available on Azure.

Q: What were the conclusions regarding intellectual property protection in prompts?

A: Users deliberated that while copyright might cover exact wording, the replication of ideas via linguistic variation is likely unstoppable.

Q: What methods were discussed for text classification in the summaries?

A: Discussions included starting with the base model and Assistant for text classification to sort texts into categories such as 'Factual' and 'Misleading', bypassing fine-tuning.

Q: What was the hot topic regarding meta-prompting and what concerns were raised?

A: Meta-prompting was discussed, with claims that advanced techniques could generate extensive documentation, but it also raised security flags when a user shared a PDF, which led to action against that user's account.
