[AINews] The Last Hurrah of Stable Diffusion? • Buttondown

Chapters

AI Twitter Recap

Discord Recap

High-level Discord Summaries

Discord Community Highlights

Langchain AI Discord

LLM Finetuning (Hamel + Dan) 🔥: Charles-Modal, Predibase, OpenPipe, and more

Perplexity AI Sharing

HuggingFace ▷ # Computer Vision

Eleuther Discord Discussions

Interconnects (Nathan Lambert) News

World-Sim

OpenAccess AI Collective (axolotl) ▷ # general (6 messages)

LangChain AI General & Updates

AI Twitter Recap

- AI Models and Architectures

- Llama 3 and Instruction Finetuning: @rasbt found Llama 3 8B Instruct to be a good evaluator model that runs on a MacBook Air, achieving 0.8 correlation with GPT-4 scores. A standalone notebook is provided.

- Qwen2 and MMLU Performance: @percyliang reports Qwen 2 Instruct surpassing Llama 3 on MMLU in the latest HELM leaderboards v1.4.0.

- Mixture of Agents (MoA) Framework: @togethercompute introduces MoA, which leverages multiple LLMs to refine responses. It achieves 65.1% on AlpacaEval 2.0, outperforming GPT-4o.

- Spectrum for Extending Context Window: @cognitivecompai
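The Llama 3 evaluator result above is stated as a correlation with GPT-4 scores. As a rough illustration of how agreement between a local judge and a reference judge can be measured, here is a minimal Pearson correlation sketch. The item does not say which coefficient was used, so Pearson is an assumption, and the score lists are made-up placeholders, not data from the notebook.

```python
import math

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical scores for the same five responses (placeholders, not real results).
judge_scores = [7.0, 4.5, 9.0, 3.0, 6.0]      # e.g. from a local evaluator model
reference_scores = [8.0, 5.0, 9.5, 2.5, 6.5]  # e.g. from a reference judge such as GPT-4

print(round(pearson(judge_scores, reference_scores), 3))
```

A value near 1.0 means the local judge ranks and spaces responses much like the reference judge; it says nothing about whether either judge is correct.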

Discord Recap

1. Stable Diffusion 3 Release and Discussions: Stability.ai released the open weights for Stable Diffusion 3 Medium; users also discuss VRAM requirements for Qwen2 models, fine-tuning challenges, and efficient GPU utilization.

2. LLM Advancements and Benchmarks: Google unveils RecurrentGemma 9B, the ARC Prize is announced as an AGI benchmark, and users discuss Scalable MatMul-free Language Modeling and model performance.

3. Collaborative LLM Development and Deployment: Users seek guidance on installing and integrating models like Mistral-instruct, on using Modal, and on LLM finetuning with techniques like QLoRA.

4. Hardware Optimization and Resource Management: Discussions cover building GPU rigs, custom CUDA development efforts, implementing Scalable MatMul-free Language Modeling, and the re-release of the CUDA Performance Checklist with video, code, and slides.
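The Scalable MatMul-free Language Modeling work mentioned above rests on a simple observation: if weights are constrained to the ternary values {-1, 0, +1}, a matrix-vector product needs no multiplications, only additions and subtractions. The sketch below illustrates just that idea in plain Python; it is a toy under that assumption, not the paper's implementation, which also involves quantization, scaling, and optimized GPU kernels.

```python
def ternary_matvec(weights: list[list[int]], x: list[float]) -> list[float]:
    """Compute W @ x for a ternary weight matrix using only additions and subtractions."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: add the input
            elif w == -1:
                acc -= xi   # -1 weight: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out

W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]
print(ternary_matvec(W, x))  # [2.0, 0.0]
```

Skipping zero weights is also where sparsity comes in: the more zeros in a row, the fewer additions that row costs.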

High-level Discord Summaries

- Stable Diffusion 3 Unleashes Potentials and Problems: Users report better image quality and more advanced prompt comprehension, but also struggles with anatomical accuracy, mixed reactions to performance, and concerns over installation and finetuning.

- Licence to Confuse: Users debate the licensing terms of SD3, with some finding the restrictions on commercial use too limiting.

- Photorealism Promise Meets Skepticism: Efforts to enhance realism run into consistency issues compared to older versions.

- Resource Effectiveness Favorable, But Customization Could Be Costly: Users appreciate the efficient GPU utilization but raise concerns about financial and technical barriers to customization.

- Installation Integration Anxiety: Issues integrating SD3 into existing frameworks prompt collaborative troubleshooting efforts.

- Zoom Stumbles, but Blog Shines: Technical issues delayed Zoom sessions, but Nehil's blog post on budget categorization was praised.

- Pooling Layer Puzzles and Scope-creep Concerns: Members discuss adding pooling layers to models and debate broad-scope chatbots versus narrowly scoped interfaces.

- Quantum of Quantization: Excitement over optimizer quantization research, along with a drive to balance model efficiency against numerical precision.

- Crediting Sparks Queries: Inquiries about missing credits from platforms like OpenAI, plus discussions on the practicality of different chatbots.

- Modal Makers, Datasette Devotees, and Chatbot Chumminess: The promise of finetuning LLMs for Kaggle competitions, Datasette's utility, and the implications of integrating AI dialogue models.

- Smartwatches Clash with Tradition: A playful debate on smartwatches versus traditional watches.

- Perplexity AI Grapples with Tech Issues: Reports of file-upload and image-interpretation problems, discussions of language model performance, and allegations of plagiarism.

- Forbes Flames Perplexity for Plagiarism: A Forbes article alleges plagiarism by Perplexity AI, highlighting ethical concerns.

- AI Ethics and Industry Progress Snapshot: Discussions on the ethical implications of Nightshade technology, Perplexity AI's advancements, and tech news such as the ICQ shutdown.

- API Integration Steps Confirmed: Confirmation of the steps for setting up the Perplexity API for integration.
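The optimizer quantization item above concerns storing optimizer state (for example, Adam moment estimates) in low precision to save memory. The specific research discussed is not identified here, so as a generic illustration, here is a minimal block-wise absmax int8 quantizer showing the memory-versus-precision trade-off such work builds on. The block size and rounding scheme are assumptions, not taken from any particular paper.

```python
def quantize_blockwise(values: list[float], block_size: int = 4) -> tuple[list[int], list[float]]:
    """Quantize floats to int8 codes, keeping one absmax scale per block."""
    codes, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        scale = absmax / 127 if absmax > 0 else 1.0  # avoid dividing by zero for all-zero blocks
        scales.append(scale)
        codes.extend(round(v / scale) for v in block)  # every code lies in [-127, 127]
    return codes, scales

def dequantize_blockwise(codes: list[int], scales: list[float], block_size: int = 4) -> list[float]:
    """Recover approximate floats from int8 codes and per-block scales."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]

# Hypothetical optimizer-state values (placeholders).
state = [0.01, -0.2, 0.05, 0.003, 1.5, -0.7, 0.0, 0.25]
codes, scales = quantize_blockwise(state)
restored = dequantize_blockwise(codes, scales)
max_error = max(abs(a - b) for a, b in zip(state, restored))
print(codes)
print(f"max abs error: {max_error:.5f}")
```

Smaller blocks track local magnitudes more closely (lower error) at the cost of storing more scales; that tension is the efficiency-versus-precision balance mentioned above.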

Discord Community Highlights

- Revising CC12M dataset with LlavaNext Expertise: The CC12M dataset gets a facelift using LlavaNext and is now available on HuggingFace.

- Global Debut of TensorFlow-based Library: A new TensorFlow-centric ML library capable of parallel and distributed training has launched.

- TPUs in Mojo’s Future: Discussions on Mojo’s potential use of TPUs.

- Transparent AI with Uncensored Models: Interest in uncensored models rises among users.

- Custom Metrics for LLMs via DeepEval: DeepEval enables custom evaluation metrics for LLMs.

- WizardLM-2’s Affordability and Efficiency: Discussion of how WizardLM-2 can be served cheaply, attributed to model efficiency and GPU rental.

- Parsing ARC Prize and AI Benchmarking: Discussion on the ARC Prize for AI benchmarking.

- Google’s RecurrentGemma 9B: Google’s RecurrentGemma 9B promises swift processing of lengthy sequences.

Langchain AI Discord

Apple Interfacing Prospects with LoRA Analogue:

Apple's adapter technology has been compared to LoRA layers, suggesting a dynamic loading system that lets local models perform a variety of tasks.

The Contorting Web of HTML Extraction:

AI engineers explored different tools for HTML content extraction, like htmlq, shot-scraper, and nokogiri, with Simonw highlighting the use of shot-scraper for efficient JavaScript execution and content extraction.

Shortcut to Summation Skips Scrutiny:

Chrisamico found it more efficient to skip HTML extraction altogether and paste an article directly into ChatGPT for summarization, forgoing a more complicated pipeline built from curl and llm.

Simon Says Scrape with shot-scraper:

Simonw provided instructions on utilizing shot-scraper for content extraction, advocating the practicality of using CSS selectors in the process for those proficient in JavaScript.

Command Line Learning with Nokogiri:

Empowering engineers to leverage their command line expertise, Dbreunig shared insights on using nokogiri as a CLI tool, complete with an example for parsing and extracting text from HTML documents.
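The shot-scraper and nokogiri tips above are command-line tools. For readers working in Python, the same basic idea, pulling readable text out of an HTML page, can be sketched with only the standard library's `html.parser`. This is not how shot-scraper or nokogiri work internally: it does no CSS selection and executes no JavaScript, it simply drops `<script>`/`<style>` contents and collects the remaining text.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style> tags."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:  # keep non-empty text outside script/style
            self.chunks.append(text)

def extract_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)

page = """
<html><head><title>Example</title><style>p { color: red; }</style></head>
<body><h1>Headline</h1><script>console.log("hidden");</script>
<p>First paragraph.</p><p>Second paragraph.</p></body></html>
"""
print(extract_text(page))
```

For pages that build their content with JavaScript, a browser-driven tool like shot-scraper remains the better fit, since a static parser only sees the raw HTML.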

LLM Finetuning (Hamel + Dan) 🔥: Charles-Modal, Predibase, OpenPipe, and more

This section highlights discussions within different channels related to LLM Finetuning. It includes topics such as a user inquiring about accessing their LangSmith credits, requests for additional credits, clarifications on credit policies, and discussions around specific tools and models like Datasette, vLLM, mistral-instruct, and more. There are also mentions of issues with missing credits, resolving access problems, and exploring various research papers and resources related to fine-tuning models. Overall, the conversations cover a range of topics from technical queries to community interaction and sharing of insights.

Perplexity AI Sharing

Perplexity AI offers advantages over SearXNG: A German-language discussion focused on the advantages of Perplexity AI over SearXNG, highlighting its unique features for professional fields. Ethical concerns about Nightshade technology were raised, emphasizing potential misuse and technical challenges. ICQ, a notable instant messaging service, is shutting down after 28 years. Perplexity AI shared industry updates, including Raspberry Pi's London IPO and General Motors' self-driving efforts in Texas. The section also included discussions on Torch caching validation, warm-up for CUDA libraries, and interest in 8k or 16k benchmarks.
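The CUDA warm-up point above reflects a common benchmarking practice: the first calls into GPU libraries often include one-time costs (context creation, lazy loading, kernel selection), so they are excluded from timing. The summary gives no details of the actual discussion, so the following is a generic, framework-agnostic sketch with a hypothetical `workload` function standing in for a model call. With PyTorch on a GPU you would additionally call `torch.cuda.synchronize()` before reading the clock, because kernel launches are asynchronous.

```python
import statistics
import time
from typing import Callable

def benchmark(fn: Callable[[], object], warmup: int = 3, iters: int = 10) -> dict[str, float]:
    """Time fn after discarding warm-up runs that absorb one-time setup costs."""
    for _ in range(warmup):
        fn()  # warm-up calls are executed but never timed
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }

# Hypothetical workload: the first call pays a one-time "initialization" cost.
_cache: dict[str, list[int]] = {}

def workload() -> int:
    if "table" not in _cache:
        time.sleep(0.05)  # simulated one-time setup, absorbed by the warm-up phase
        _cache["table"] = list(range(10_000))
    return sum(_cache["table"])

print(benchmark(workload))
```

Reporting the median alongside min and max keeps one noisy iteration from dominating the result.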

HuggingFace ▷ # Computer Vision

Semantic search appreciated in the space

A user expressed gratitude, saying "This is great... especially with the semantic search. Thanks for creating this space."

Request for computer vision project ideas

Another user asked for suggestions on "awesome project ideas in the computer vision domain."

CCTV smoking detection project suggested

A response to the project idea request suggested creating a system for "detection of a man smoking in CCTV surveillance" with a feature to make the bounding box red if the confidence is greater than 0.9.
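The bounding-box rule suggested above (draw the box in red when confidence exceeds 0.9) is simple to express in code. Here is a minimal sketch assuming a hypothetical `Detection` record produced by whatever detector is used; the green fallback for lower-confidence boxes is an assumption, and actually drawing on video frames would need a library such as OpenCV.

```python
from dataclasses import dataclass

RED = (255, 0, 0)    # RGB; OpenCV expects BGR, i.e. (0, 0, 255) for red
GREEN = (0, 255, 0)
CONFIDENCE_THRESHOLD = 0.9

@dataclass
class Detection:
    """Hypothetical detector output: a label, a confidence score, and a box (x1, y1, x2, y2)."""
    label: str
    confidence: float
    box: tuple[int, int, int, int]

def box_color(det: Detection) -> tuple[int, int, int]:
    """Red for smoking detections above the threshold, green otherwise (fallback is an assumption)."""
    if det.label == "smoking" and det.confidence > CONFIDENCE_THRESHOLD:
        return RED
    return GREEN

detections = [
    Detection("smoking", 0.95, (40, 60, 120, 200)),
    Detection("smoking", 0.72, (300, 80, 360, 210)),
]
for det in detections:
    print(det.label, det.confidence, box_color(det))
```

Note the strict `>` comparison: a detection at exactly 0.9 stays green, matching the "greater than 0.9" wording.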

Eleuther Discord Discussions

This section provides insights into various discussions happening on the Eleuther Discord server. Members are engaged in conversations ranging from sharing projects like the RIG library for Rust integration with Cohere to discussing models like LlamaGen for image generation. They are also exchanging ideas on research topics, such as limitations in transformers and the novelty of papers like Samba. Furthermore, discussions extend to hardware topics like GPU choices for model performance and costs, along with practical implementations like integrating GIT into sys prompts in LM Studio.

Interconnects (Nathan Lambert) News

The section discusses various topics related to technology and AI. It covers the announcement of the ARC Prize for AGI, reactions to podcast interviews, industry awareness of benchmarks, critiques on TechCrunch articles, and discussions on human intelligence and genetics. Additionally, it delves into the unexpected partnership deal between Microsoft, OpenAI, and Tesla, as well as advancements in AI and development challenges. Links to resources such as the ARC Prize and a tweet from Kyle Wiggers are also provided.

World-Sim

An update has been released to simplify writing and editing longer console prompts for both mobile and desktop platforms, promising a smoother interaction experience. Additionally, there was an inquiry about the open-source status of WorldSim, but no further information was provided in the messages.

OpenAccess AI Collective (axolotl) ▷ # general (6 messages)

- DeepEval integrates custom LLM metrics effortlessly: Users can define custom evaluation metrics for LLMs in DeepEval, including metrics like G-Eval, Summarization, Faithfulness, and Hallucination. For more details on implementing these metrics, check the documentation.

- Uncensored models offer unfiltered responses: Discussion on the purpose of new uncensored models lacking filters or biases, with users preferring them for transparent responses.

- WizardLM-2 pricing sparks curiosity: Questions on how WizardLM-2 operates profitably at a low cost, with explanations on model efficiency and GPU rental.

- Self-hosting vs. using providers for LLMs: Debate on the challenges and costs of self-hosting models versus service providers like OpenRouter.

- Batch inference could justify GPU rental: Members discuss how renting GPUs pays off better for batch inference than for single requests, and suggest tools like Aphrodite-engine or vLLM for optimization.
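The batching argument above comes down to simple arithmetic: a rented GPU costs the same per hour whether it serves one request at a time or many concurrent ones, so the cost per generated token falls as aggregate throughput rises. The sketch below makes that explicit; the hourly price and throughput figures are made-up placeholders, not measurements of any provider, Aphrodite-engine, or vLLM.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """USD cost to generate one million tokens on a GPU rented at a fixed hourly price."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000

# Hypothetical figures for illustration only.
price = 2.00           # USD per GPU-hour
single_stream = 40.0   # tokens/s when serving one request at a time
batched = 1_000.0      # tokens/s aggregate across many concurrent requests

print(f"single request: ${cost_per_million_tokens(price, single_stream):.2f} per 1M tokens")
print(f"batched:        ${cost_per_million_tokens(price, batched):.2f} per 1M tokens")
```

With these placeholder numbers the batched setup is 25 times cheaper per token, which is why self-hosting tends to make sense only when there is enough traffic to keep the GPU busy.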

LangChain AI General & Updates

- LangChain Postgres documentation confusion: A member faced issues with LangChain Postgres documentation lacking a checkpoint.

- Combining chains: Users discussed combining chain outputs in one call, exploring methods like RunnableParallel.

- Error using GPT-4: A member reported an error when using GPT-4 with langchain_openai and was advised to use ChatOpenAI instead.

- Apps on Torchtune: Members can now add apps to their account starting June 18, enabling enhanced server management and safety features.
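For the chain-combining question above, LangChain's RunnableParallel runs several runnables on the same input and returns their outputs as a dict keyed by branch name. To show the shape of that pattern without depending on LangChain, here is a plain-Python sketch; `run_parallel` and the two branch functions are hypothetical stand-ins, not LangChain's API or implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable

def run_parallel(branches: dict[str, Callable[[Any], Any]], value: Any) -> dict[str, Any]:
    """Send the same input to every branch concurrently and collect results by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(branches))) as pool:
        futures = {name: pool.submit(fn, value) for name, fn in branches.items()}
        return {name: fut.result() for name, fut in futures.items()}

# Hypothetical branches standing in for two chains (a summarizer and a keyword extractor).
def summarize(text: str) -> str:
    return text.split(".")[0] + "."

def keywords(text: str) -> list[str]:
    return sorted({w.strip(".,").lower() for w in text.split() if len(w) > 6})

doc = "LangChain composes runnables. Parallel branches share one input."
print(run_parallel({"summary": summarize, "keywords": keywords}, doc))
```

Because every branch receives the same input and the results come back under stable keys, the combined dict can be fed straight into a downstream step that needs both outputs in one call.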

FAQ

Q: What did @rasbt find about Llama 3 8B Instruct as an evaluator model?

A: Llama 3 8B Instruct, running on a MacBook Air, proved to be a good evaluator model, achieving 0.8 correlation with GPT-4 scores; a standalone notebook is provided.

Q: How does Qwen2 perform compared to Llama 3?

A: Qwen 2 Instruct surpassed Llama 3 on MMLU in the latest HELM leaderboards v1.4.0.

Q: What is the MoA framework and how does it improve responses?

A: The Mixture of Agents (MoA) framework leverages multiple LLMs to refine responses and outperforms GPT-4o with a 65.1% score on AlpacaEval 2.0.
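The Mixture-of-Agents idea can be sketched as layers of "proposer" models whose answers are passed as reference material to the next layer, with a final aggregator producing a single response. The following is a simplified illustration under that assumption: the model callables are hypothetical stubs rather than real LLM clients, and the prompt wording is invented, not Together's.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical: takes a prompt, returns a completion

def build_prompt(question: str, previous: list[str]) -> str:
    """Attach earlier responses as numbered reference material (wording is illustrative)."""
    if not previous:
        return question
    refs = "\n".join(f"[{i + 1}] {r}" for i, r in enumerate(previous))
    return f"{question}\n\nReference responses:\n{refs}\n\nWrite an improved answer."

def mixture_of_agents(question: str, layers: list[list[Model]], aggregator: Model) -> str:
    previous: list[str] = []
    for layer in layers:
        prompt = build_prompt(question, previous)
        previous = [model(prompt) for model in layer]  # each proposer sees the prior layer's outputs
    return aggregator(build_prompt(question, previous))  # final synthesis step

# Stub "models" so the sketch runs without any API.
def make_stub(name: str) -> Model:
    return lambda prompt: f"{name} answer (saw {prompt.count('[')} refs)"

layers = [[make_stub("A"), make_stub("B")], [make_stub("C"), make_stub("D")]]
print(mixture_of_agents("What is 2 + 2?", layers, make_stub("Agg")))
```

In a real setup each stub would be a call to a different LLM; the layered structure is what lets later models refine earlier answers.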

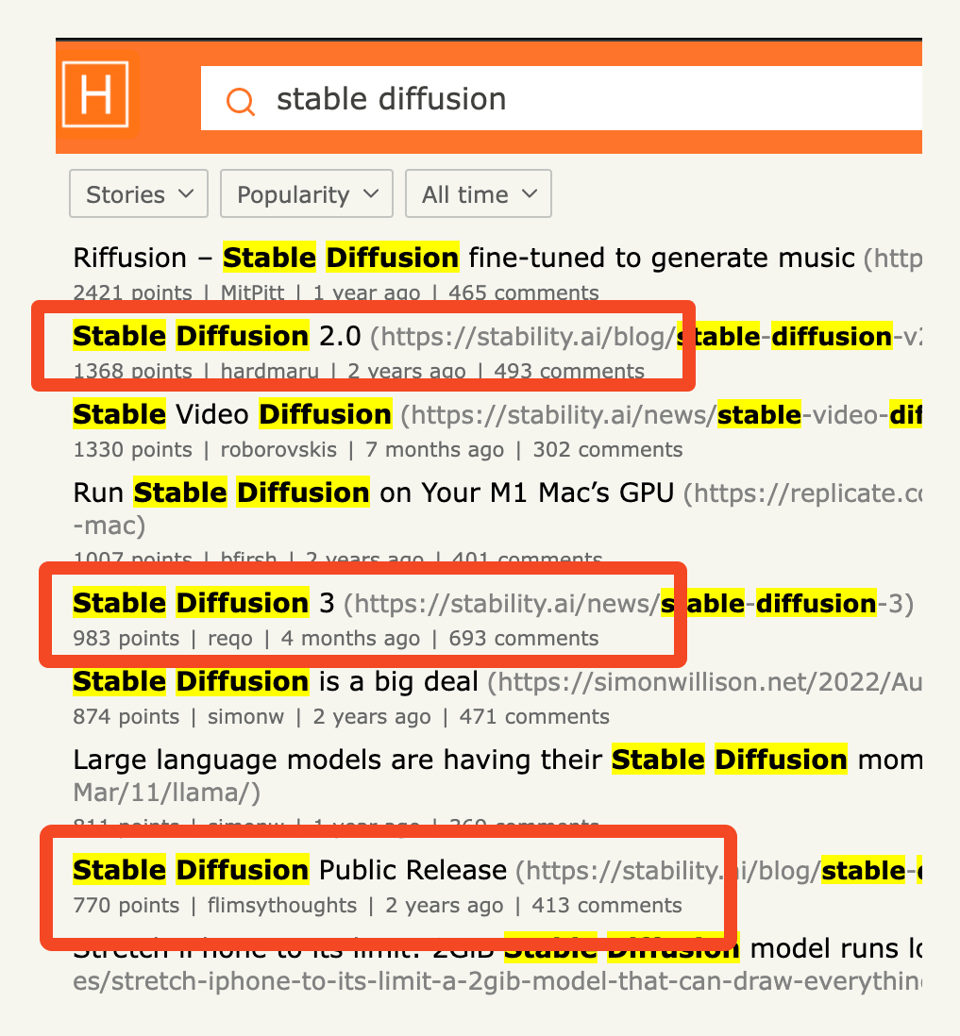

Q: What are the key discussions surrounding Stable Diffusion 3?

A: Stability.ai released open weights for Stable Diffusion 3 Medium; users also discussed VRAM requirements, fine-tuning challenges, and efficient GPU utilization.

Q: What are the concerns raised regarding the licensing terms of Stable Diffusion 3?

A: Debate exists over the licensing terms of SD3, with concerns about restrictions on commercial use being too limiting.

Q: What challenges are users experiencing with the installation and integration of Stable Diffusion 3?

A: Issues integrating SD3 into frameworks have prompted collaborative troubleshooting efforts.

Q: What did Google unveil, and what other model and benchmark news was discussed?

A: Google unveiled RecurrentGemma 9B. Other discussions covered the ARC Prize, announced as an AGI benchmark, Scalable MatMul-free Language Modeling, and model performance benchmarks.

Q: What are the benefits of DeepEval in relation to LLMs?

A: DeepEval enables users to define custom evaluation metrics for LLMs, including metrics like G-Eval, Summarization, Faithfulness, and Hallucination.
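To make "custom evaluation metric" concrete, here is a library-agnostic sketch of what such a metric can look like: a scoring function plus a pass/fail threshold. This is not DeepEval's API (its documentation describes the actual base class to subclass); the `KeywordCoverageMetric` name and its scoring rule are hypothetical, chosen only because they run without an LLM judge.

```python
from dataclasses import dataclass, field

@dataclass
class KeywordCoverageMetric:
    """Hypothetical metric: fraction of required keywords that appear in a model output."""
    required_keywords: list[str]
    threshold: float = 0.5
    score: float = field(default=0.0, init=False)

    def measure(self, output: str) -> float:
        text = output.lower()
        hits = sum(1 for kw in self.required_keywords if kw.lower() in text)
        # An empty keyword list is treated as trivially satisfied.
        self.score = hits / len(self.required_keywords) if self.required_keywords else 1.0
        return self.score

    def is_successful(self) -> bool:
        return self.score >= self.threshold

metric = KeywordCoverageMetric(["refund", "30 days", "receipt"], threshold=0.66)
metric.measure("You can request a refund within 30 days.")
print(metric.score, metric.is_successful())
```

Metrics such as G-Eval or Faithfulness follow the same score-plus-threshold shape, but compute the score with an LLM judge instead of string matching.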

Q: What are the ethical concerns raised about Nightshade technology?

A: Discussions highlighted ethical implications of Nightshade technology, emphasizing potential misuse and technical challenges.

Q: What discussions are happening regarding uncensored models and their responses?

A: Users are discussing the purpose of uncensored models that lack filters or biases, with a preference for transparent responses.
