[AINews] Claude 3 just destroyed GPT 4 (see for yourself) • Buttondown

Chapters

Claude 3 Overview

AI Twitter

LAION Discord

Claude 3 on OpenRouter

Fine-tuning Troubles and Model Training

OpenAI API and Mistral AI Discussions Continuation

Quant Tests and Mistral Showcase

Nous Research AI: General Messages

Discussion on Various Topics in Eleuther Discord Channels

Various LM Studio Discussions

Diffusion Discussions

General Discussions on AI Models and Techniques

Cuda Mode Messages

Empowering Long Context RAG with LlamaIndex Integration

LangChain AI - Share Your Work

LangChain AI Tutorials - Messages

DiscoResearch

Claude 3 Overview

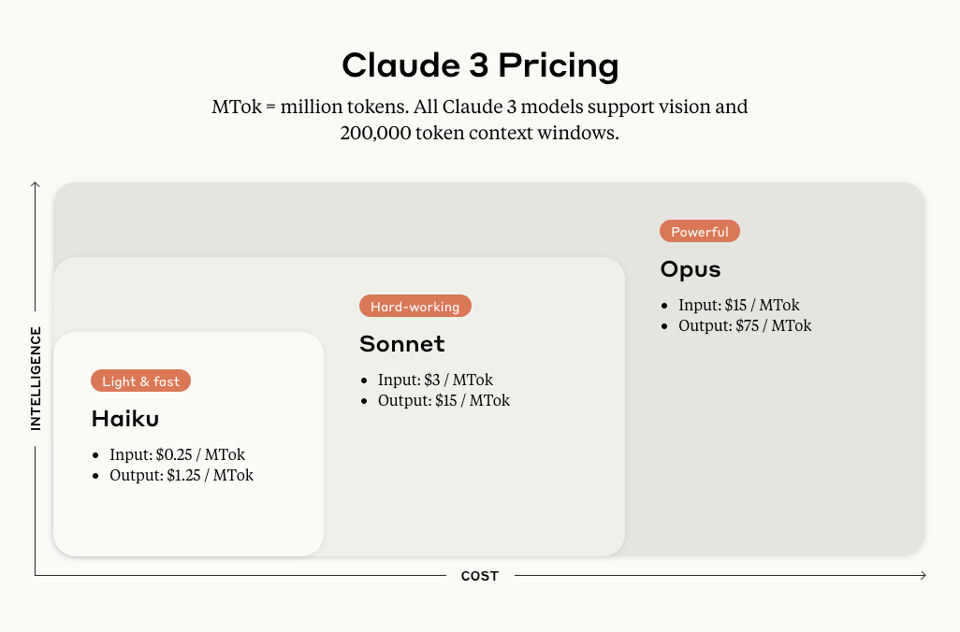

This section provides an overview of Claude 3, a new model family that outperforms GPT-4 on multiple benchmarks. Claude 3 comes in three sizes: Haiku, Sonnet, and Opus, with Opus being the most powerful. The models are multimodal, have sophisticated vision capabilities, and excel at tasks like turning a two-hour video into a blog post. Claude 3 offers long context with near-perfect recall and is easier to use for complex instructions and structured outputs. The models also have better safety measures and have received positive evaluations in domains such as finance, medicine, and philosophy. Overall, Claude 3 outperforms GPT-4 on tasks such as coding a Discord bot and on the GPQA benchmark, impressing both benchmark authors and users.

AI Twitter

This section covers updates and insights from AI Twitter on AI progress, capabilities, investments, business, safety, regulation, memes, and more. Highlights include quotes and views from prominent figures like Sam Altman and François Chollet, insights on Google's Gemini 1.5 Pro, discussions of AI investments by SoftBank and Nvidia, and Elon Musk's lawsuit. The section also covers humorous anecdotes, regulatory concerns, and cutting-edge research findings in AI.

LAION Discord

HyperZ⋅Z⋅W Operator Shaking Foundations

- Introduced by @alex_cool6, the #Terminator network blends classic and modern technologies and uses the novel HyperZ⋅Z⋅W Operator. The full research was linked in the announcement.

Claude 3 Attracts Attention

- Claude 3's performance benchmarks are sparking heated discussion, with a Reddit thread collecting the community's own investigations.

Claude 3 Outperforms GPT-4

- @segmentationfault8268 found Claude 3 excelling at dynamic responses and understanding, which could draw users away from their existing ChatGPT Plus subscriptions.

CUDA Kernel Challenges Persist with Claude 3

- @twoabove noted that, despite its advances, Claude 3 shows little improvement on non-standard tasks such as handling PyTorch CUDA kernels.

Sonnet Enters VLM Arena

- Sonnet is drawing interest as a vision-language model (VLM), with comparisons to heavyweights such as GPT-4V and CogVLM.

Seeking Aid for DPO Adjustment

- @huunguyen is looking for collaborators to refine Direct Preference Optimization (DPO). Interested collaborators can connect via direct message.
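In current LLM work, "DPO" refers to Direct Preference Optimization, which fine-tunes a policy on preference pairs without training a separate reward model. The thread does not say which part of DPO @huunguyen wanted to adjust, so the following is only a minimal sketch of the standard per-pair DPO loss in plain Python. The function and argument names are illustrative, and the inputs are assumed to be summed log-probabilities of whole responses.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss (illustrative sketch, not a library API)."""
    # Implicit rewards: log-ratio of the trainable policy to the frozen
    # reference model for the preferred (chosen) and dispreferred response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) is the same as softplus(-margin).
    return softplus(-margin)


# Policy identical to the reference: the margin is 0, so the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy made the chosen response 2 nats more likely: the loss drops below log(2).
print(dpo_loss(-10.0, -15.0, -12.0, -15.0, beta=0.1))
```

`beta` controls how tightly the policy stays tied to the reference model; 0.1 is only an assumed default here.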

Claude 3 on OpenRouter

The much-anticipated Claude 3 has been released, and an experimental self-moderated version is available on OpenRouter. The LLM Security Game, in which players try to trick GPT-3.5 into exposing a secret key, drew interest along with some words of caution. Reactions to comparisons between Claude 3 and GPT-4 are ongoing, with users reporting that Claude 3 showed better text comprehension in their tests. Users also debated the capabilities of the Claude 3 variants, noting that Sonnet outperformed Opus in some instances. Separately, community members suspect that Gemini Ultra's performance has degraded over time, prompting further discussion of model performance. Shared links include OpenRouter's Claude 3.0 page, the LLM Encryption Challenge on Discord, and an image of Claude performance test results.

Fine-tuning Troubles and Model Training

This section covers users' experiences fine-tuning AI models and the challenges they ran into. Users discussed problems fine-tuning the OpenOrca Mistral 7B model, mentioned difficulties implementing speculative decoding (SD) and lookup decoding in server APIs, which limits what the models can do, and shared experiences training models such as the Mamba LM chess model. There were also conversations about cosine similarity cutoffs, the use of special tokens in datasets, and guidance for fine-tuning small to medium-sized language models. Links to resources and tools for model training were also shared.
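The summary does not say what the cosine similarity cutoffs were applied to. One common use, assumed here purely for illustration, is dropping near-duplicate training examples whose embeddings are too similar. A minimal sketch with plain Python lists; the 0.95 cutoff and the function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def dedupe_by_cutoff(embeddings: list[list[float]], cutoff: float = 0.95) -> list[int]:
    """Return indices of items kept after dropping near-duplicates.

    Hypothetical helper: an item is kept only if its similarity to every
    previously kept item is below `cutoff`.
    """
    kept: list[int] = []
    for i, emb in enumerate(embeddings):
        if all(cosine_similarity(emb, embeddings[j]) < cutoff for j in kept):
            kept.append(i)
    return kept


# The second vector is almost parallel to the first, so it is dropped.
print(dedupe_by_cutoff([[1.0, 0.0], [0.99, 0.01], [0.0, 1.0]]))
```

The greedy loop compares each item against everything kept so far, so it is O(n²); for large datasets an approximate nearest-neighbour index would be the usual substitute.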

OpenAI API and Mistral AI Discussions Continuation

- Puzzle Prompt Engineering Saga Continues: Users are still struggling to craft effective prompts for an AI vision puzzle involving an owl and a tree, because GPT-4V keeps misinterpreting the image. Discussions continue about the model's limitations and whether it may need retraining.

- The Highs and Lows of Model Behavior: Although carefully nuanced prompts achieved some success, GPT-4V consistently misreads measurements in images, struggling in particular to distinguish between units and heights, a notable weakness of the model.

- Playful Competition Heats Up: Users engage in humorous banter and challenges aimed at outperforming the AI's current understanding of complex images, with occasional correct responses leading to light-hearted moments.

- Sharing Knowledge Comes at a Cost: Concerns are raised about auto-moderation limitations in the Discord server hindering discussions on certain details and prompting a call for OpenAI to revise prompt restrictions for a more transparent and productive engineering dialogue.

- Newcomer Queries and Tips Exchange: New participants seek advice on prompt engineering and AI training for educational purposes, with seasoned users offering guidance on adhering to platform terms of service and best practices for prompt writing.

Links mentioned:

- Terms of use

- DALL·E 3: DALL·E 3 understands significantly more nuance and detail than previous systems, enabling easy translation of ideas into accurate images.

Quant Tests and Mistral Showcase

This section covers two quant tests, one for long-context scientific reasoning and one for medium-length report writing; no specific performance metrics were given. The Mistral showcase highlights projects built on Mistral models, including collaborative AI for offline LLM agents and a feature-rich Discord bot, along with a report of formatting flaws in Mistral-large's output. Users shared repositories and experiences from applying Mistral models in different settings.

Nous Research AI: General Messages

- AI for Music: User @audaciousd expressed interest in new generative music AI, particularly from Stability AI, and asked others for information.

- Claude 3 Generates Buzz: Users like @fibleep, @4biddden, and @mautonomy discussed Claude 3's release and how it compares to GPT-4.

- GPT-4 vs. Claude 3 Opinions: User @teknium started Twitter polls to gauge whether people think Claude 3 Opus is better than GPT-4.

- B2B Sales Strategies Shared: User @mihai4256 sought advice on selling B2B software products and received insights on targeting small businesses and engagement strategies.

- Knowledge Graph Building Resources Explored: Users @mihai4256 and @everyoneisgross discussed knowledge graph creation, with a suggestion to use Hermes for structured data extraction.
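The suggestion to use Hermes for structured extraction implies a two-step pipeline: prompt the model to emit triples, then load them into a graph. The sketch below covers only the second step and assumes, hypothetically, that the model was asked to reply with a JSON list of `subject`/`relation`/`object` objects. The prompt, schema, and function name are illustrative, not from the discussion.

```python
import json
from collections import defaultdict


def build_graph(llm_output: str) -> dict[str, list[tuple[str, str]]]:
    """Turn a JSON list of triples into an adjacency list.

    Assumed (hypothetical) schema from the extraction prompt:
    [{"subject": ..., "relation": ..., "object": ...}, ...]
    """
    graph: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for triple in json.loads(llm_output):
        subj = triple["subject"].strip()
        rel = triple["relation"].strip()
        obj = triple["object"].strip()
        if subj and rel and obj:  # skip incomplete extractions
            graph[subj].append((rel, obj))
    return dict(graph)


sample = (
    '[{"subject": "Claude 3", "relation": "developed_by", "object": "Anthropic"},'
    ' {"subject": "Claude 3", "relation": "has_variant", "object": "Opus"},'
    ' {"subject": "", "relation": "x", "object": "y"}]'
)
print(build_graph(sample))
```

Validating the model's JSON before loading it matters in practice, since extraction output can be malformed or incomplete.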

Links mentioned: Various links to tweets, AI products, and discussions.

Discussion on Various Topics in Eleuther Discord Channels

Discussions across several Eleuther Discord channels covered a wide range of topics. One conversation focused on contrastive learning for unanswerable questions in SQuADv2, with suggestions for creating negative samples, alongside concerns over RLHF's impact on model capabilities. Another highlighted the Terminator network's potential as a game-changer in AI, proposing a new architecture for full-context interaction. Elsewhere, users explored creative uses of Figma for animation and debated how efficiently architectures like Mamba handle learning tasks.
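For the SQuADv2 discussion, one way to frame contrastive learning (an assumption, since the thread's exact setup isn't given) is an InfoNCE objective: an anchor such as a question embedding is scored against one positive and several negatives, for example passages that do not contain an answer. A minimal plain-Python sketch, assuming L2-normalized embeddings; the function name and temperature default are illustrative.

```python
import math


def info_nce_loss(anchor: list[float], positive: list[float],
                  negatives: list[list[float]], temperature: float = 0.1) -> float:
    """InfoNCE: pull the anchor toward the positive, push it from negatives.

    Vectors are assumed to be L2-normalized, so a dot product equals
    cosine similarity.
    """
    def dot(u: list[float], v: list[float]) -> float:
        return sum(x * y for x, y in zip(u, v))

    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, neg) / temperature for neg in negatives]
    # Cross-entropy with the positive at index 0, via a stable log-sum-exp.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


# The anchor matches the positive and is orthogonal to the negative: low loss.
print(info_nce_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]], temperature=1.0))
# Swapping positive and negative raises the loss.
print(info_nce_loss([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]], temperature=1.0))
```

Lower temperatures sharpen the softmax, so hard negatives (passages that look relevant but lack the answer) dominate the gradient.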

Various LM Studio Discussions

This section highlights various discussions that took place in LM Studio groups. It includes inquiries about using different models directly, dealing with errors and support channels, hardware discussions focusing on VRAM usage and Apple's unified memory architecture, and challenges faced when running LM Studio on integrated GPUs. Additionally, there are conversations about potential model upgrades, Autogen integration issues, and the use of different hardware setups for AI work.
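The VRAM discussions often come down to simple arithmetic: the weights alone need roughly parameters × bits per weight ÷ 8 bytes. A rough sketch (the function name is hypothetical) that ignores the KV cache, activations, and runtime overhead, all of which push real usage higher:

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GiB (lower bound)."""
    bytes_total = n_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 2**30


# A 7B-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: {weight_memory_gib(7e9, bits):.1f} GiB")
```

Quantized file formats often average somewhat more bits per weight than their nominal width, so treat the results as lower bounds. On Apple silicon the same estimate is compared against unified memory, part of which is typically not available to the GPU.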

Diffusion Discussions

Duplicate Scheduler Names Misleading:

- Identified a bug with diffusers where scheduler names display incorrectly, showing EulerDiscreteScheduler instead of LCMScheduler after updating the scheduler. The issue was raised on GitHub #7183, and a temporary fix involves using explicit print statements to confirm the correct scheduler class.

Bug Fix for Scheduler Misnaming:

- Shared GitHub pull request #7192 by yiyixuxu, which fixes the scheduler class-naming bug in diffusers.

How-to for Image-Inpainting with

General Discussions on AI Models and Techniques

In this section, participants discussed various AI models and techniques. Topics included image inpainting with Hugging Face's Diffusers, installation updates via GitHub pull requests, setting LoRA weights, handling NSFW generation models, model training challenges, AI-generated music quality, AI-generated art challenges, technical and ethical issues in AI research, and the personal value some users find in models like Pony. The discussions also touched on the importance of proper model training, challenges with specific training approaches, using intelligently designed backing tracks for music generation, and the effects of AI technologies on individual well-being. Links shared include resources on image inpainting, the importance of tokenization in AI, and upcoming AI conferences.
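Setting a LoRA weight usually means scaling the low-rank update before it is added to the base weights. Under the standard LoRA formulation, the merged weight is W + scale · (alpha / r) · B·A, where r is the rank. A minimal plain-Python sketch in which `scale` stands in for the user-facing LoRA weight; the function names are illustrative, and real pipelines may apply the scale at runtime instead of merging.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive matrix product of a (m x k) and b (k x n)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def merge_lora(w: list[list[float]], lora_a: list[list[float]],
               lora_b: list[list[float]], alpha: float,
               scale: float = 1.0) -> list[list[float]]:
    """Return W + scale * (alpha / r) * (B @ A).

    w: d_out x d_in base weight; lora_b: d_out x r; lora_a: r x d_in.
    """
    r = len(lora_a)  # rank = number of rows in A
    delta = matmul(lora_b, lora_a)
    factor = scale * alpha / r
    return [[w[i][j] + factor * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


base = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 1.0]]    # rank-1 A: 1 x 2
b = [[1.0], [0.0]]  # rank-1 B: 2 x 1
print(merge_lora(base, a, b, alpha=1.0, scale=0.5))
```

A scale of 0 leaves the base weights unchanged, and larger values push the model further toward the LoRA's learned behavior.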

Cuda Mode Messages

CUDA MODE ▷ #torch (3 messages):

- New PyTorch Dev Podcast Episode Alert: @andreaskoepf shared a link to a new episode of the PyTorch Developer Podcast discussing AOTInductor.

- Troubleshooting CUDA Kernel for Histograms: @srns27 is seeking help with a CUDA kernel they've written; the intended function is to create a parallel histogram, but they're experiencing inconsistent results with gpuAtomicAdd. They question why atomicAdd is not functioning correctly within their kernel code.

- Podcast Enthusiasm Shared: @ericauld expressed enjoyment of the new PyTorch Developer Podcast episodes, appreciating their concise format.
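When a parallel histogram kernel like the one @srns27 described gives inconsistent results, a common first step is to compare its output against a sequential CPU reference on the same input. The sketch below is such a reference in plain Python. The equal-width binning over [lo, hi) is an assumption and must match the kernel's own binning rule for the comparison to mean anything.

```python
def reference_histogram(values: list[float], num_bins: int,
                        lo: float, hi: float) -> list[int]:
    """Sequential equal-width histogram over [lo, hi); out-of-range values are skipped."""
    counts = [0] * num_bins
    width = (hi - lo) / num_bins
    for v in values:
        if lo <= v < hi:
            b = int((v - lo) / width)
            counts[min(b, num_bins - 1)] += 1  # guard against float rounding at hi
    return counts


print(reference_histogram([0.0, 0.5, 1.0, 1.5, 2.5, 3.9, 4.0, -1.0], 4, 0.0, 4.0))
```

If the GPU counts change from run to run, or their total differs from the number of in-range inputs, a race on the bin counters (for example a plain `+=` where an atomic add is needed) is a likely suspect.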

CUDA MODE ▷ #announcements (1 messages):

- Tune in for CUDA Gotchas: @andreaskoepf alerted @everyone that CUDA-MODE Lecture 8: CUDA performance gotchas is starting soon, promising tips on maximizing occupancy, coalescing memory accesses, and minimizing control divergence, with live demos included. The lecture is scheduled for Unix time 1709409600 (2024-03-02 20:00 UTC).
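Occupancy, one of the lecture topics, is the ratio of active warps on a streaming multiprocessor (SM) to the maximum it supports, and it is capped by whichever resource runs out first: warp slots, block slots, registers, or shared memory. The simplified calculator below is a sketch: its default limits are assumed values roughly matching an A100-class GPU, and it ignores register and shared-memory allocation granularity, so treat its result as an approximation of what NVIDIA's own occupancy tools report.

```python
def theoretical_occupancy(threads_per_block: int, regs_per_thread: int,
                          smem_per_block: int,
                          max_warps_per_sm: int = 64,     # assumed limit
                          max_blocks_per_sm: int = 32,    # assumed limit
                          regs_per_sm: int = 65536,       # assumed limit
                          smem_per_sm: int = 164 * 1024   # assumed limit, bytes
                          ) -> float:
    """Simplified theoretical occupancy: active warps / max warps per SM."""
    warps_per_block = -(-threads_per_block // 32)  # ceil(threads / warp size)
    limits = [max_blocks_per_sm, max_warps_per_sm // warps_per_block]
    if regs_per_thread > 0:
        # Count registers for whole warps, since a partial warp still occupies one.
        limits.append(regs_per_sm // (regs_per_thread * 32 * warps_per_block))
    if smem_per_block > 0:
        limits.append(smem_per_sm // smem_per_block)
    active_blocks = min(limits)
    return active_blocks * warps_per_block / max_warps_per_sm


print(theoretical_occupancy(256, 32, 0))          # warp-slot limited
print(theoretical_occupancy(256, 64, 0))          # register limited
print(theoretical_occupancy(256, 32, 48 * 1024))  # shared-memory limited
```

Doubling registers per thread from 32 to 64 halves the number of resident blocks in this model, which is why register pressure is a classic occupancy gotcha.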

CUDA MODE ▷ #suggestions (5 messages):

- Shrinking SRAM Discussed on Asianometry: User @iron_bound shared a YouTube video titled 'Can SRAM Keep Shrinking?' from Asianometry.

- Praise for Asianometry's Insightful Content: @apaz praised the Asianometry channel, recommending it after following the content for about a year.

- CUDA Programming Resource Shared: @ttuurrkkii. posted a GitHub repository link as a helpful resource for beginners in CUDA parallel programming and GPUs.

- Video Walkthrough of Building GPT: Another contribution from @iron_bound was a YouTube video explaining how to build a GPT model, following important papers and techniques from OpenAI's research.

CUDA MODE ▷ #jobs (4 messages):

- Join Lamini AI’s Mission to Democratize Generative AI: @muhtasham shared an opportunity with Lamini AI which is seeking HPC Engineers to optimize LLMs on AMD GPUs.

- Quadrature Seeks GPU Optimization Engineer: @d2y.dx2 highlighted an opening at Quadrature for an engineer specialized in optimizing AI workloads on GPUs in either London or New York.

CUDA MODE ▷ #beginner (11 messages🔥):

- CUDA Troubles in Google Colab: User @ttuurrkkii. expressed difficulties in making CUDA work in Google Colab.

- Lightning AI to the Rescue?: In helping @ttuurrkkii., @andreaskoepf recommended trying out Lightning AI studios as a potential solution for CUDA issues on Google Colab.

- Setting Up CUDA on Kaggle: User .bob mentioned the need to set up CUDA on Kaggle for working with multi-GPU environments.

- C or CPP for CUDA and Triton?: @pyro99x inquired about the necessity of knowing low-level languages like C or C++ for working with Triton and CUDA. @briggers clarified the requirements.

- Triton for Performance Maximization: Following up on the discussion about Triton, @briggers suggested ways to enhance performance.

- C from Python in CUDA-Mode: To address @pyro99x's query about Python-friendly ways to work with Triton and CUDA, @jeremyhoward mentioned relevant resources.

- How to Install the CUTLASS Package: @umerha asked how to install and include the CUTLASS C++ package.

CUDA MODE ▷ #youtube-recordings (5 messages):

- Lecture 8 Redux Hits YouTube: @marksaroufim shared a lecture titled CUDA Performance Checklist on YouTube, including the code samples and slides.

- Gratitude for Re-recording: @andreaskoepf and @ericauld expressed their thanks to @marksaroufim for re-recording Lecture 8.

- Rerecording Takes Time: @marksaroufim expressed surprise that re-recording the lecture still took 1.5 hours.

CUDA MODE ▷ #ring-attention (53 messages🔥):

- Ring Attention in the Spotlight: @andreaskoepf highlighted a discussion about Ring Attention and Striped Attention on the YK Discord.

- Exploring Flash Decoding for LLMs: @andreaskoepf expressed interest in trying out Flash Decoding, a method for improving inference efficiency in Large Language Models (LLMs).

- Diving into Flash-Decoding and Ring Attention Implementation: @iron_bound and @andreaskoepf dug into the specifics of Flash-Decoding, discussing steps such as the log-sum-exp reduction, where these steps appear in the reference code, and how they compare with existing pieces such as softmax_lse.

- Clarifying Flash-Decoding Details: Discussions by @apaz, @nshepperd, and @andreaskoepf elaborated on the workings of Flash Attention and its implementation.

- Collaborative Development and Impromptu Meetups: @andreaskoepf signaled readiness to implement initial Ring-Llama tests and other users coordinated their participation in voice chats for collaboration.
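The log-sum-exp step discussed above is what lets Flash-Decoding (and Ring Attention) split the keys and values into chunks, process each chunk independently, and still recover exact softmax attention. Each chunk returns its partial output together with the log-sum-exp of its scores, which is the role a stored value such as `softmax_lse` plays, and the partials are then rescaled and summed. A minimal single-query sketch in plain Python, assuming the scores are already scaled dot products; the function names are illustrative.

```python
import math


def logsumexp(xs: list[float]) -> float:
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def attend_chunk(scores: list[float], values: list[list[float]]):
    """Softmax attention restricted to one chunk of keys/values.

    Returns the chunk's normalized output and the log-sum-exp of its scores.
    """
    lse = logsumexp(scores)
    weights = [math.exp(s - lse) for s in scores]
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return out, lse


def combine_chunks(partials):
    """Merge (output, lse) pairs from independent chunks into exact attention."""
    total_lse = logsumexp([lse for _, lse in partials])
    dim = len(partials[0][0])
    out = [0.0] * dim
    for chunk_out, lse in partials:
        weight = math.exp(lse - total_lse)  # chunk's share of the softmax mass
        for d in range(dim):
            out[d] += weight * chunk_out[d]
    return out, total_lse


# One query against four keys, split into two chunks of two.
scores = [0.1, 2.0, -1.0, 0.5]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
full_out, full_lse = attend_chunk(scores, values)
merged_out, merged_lse = combine_chunks(
    [attend_chunk(scores[:2], values[:2]), attend_chunk(scores[2:], values[2:])]
)
print(full_out, merged_out)  # equal up to floating-point rounding
```

In Ring Attention the same merge runs incrementally as each key/value block arrives from a neighboring device, rather than over all chunks at once.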

Empowering Long Context RAG with LlamaIndex Integration

- Integration of LlamaIndex with LongContext: @andysingal shared an article on empowering long-context RAG by integrating LlamaIndex with LongContext. The article highlights the release of Google’s Gemini 1.5 Pro, with its 1M-token context window, and how it could be integrated.

LangChain AI - Share Your Work

Chat with YouTube Videos through Devscribe AI:

- User @deadmanabir introduced Devscribe AI, a project to interact with YouTube videos for summaries and key concepts without watching the whole content.

- Features include pre-generated summaries, video organization, and contextual video chat.

- A video demo and a project link were shared, along with a request for feedback and for shares on LinkedIn and Twitter.

Generative AI Enhancing Asset-Liability Management:

- User @solo78 discussed the role of generative AI in revolutionizing asset-liability management in the life insurance industry.

- The post detailed potential benefits with a link to the article.

Feynman Technique for Efficient Learning:

- User @shving90 shared a Twitter thread from @OranAITech about adopting the Feynman Technique for better understanding of concepts.

Introducing Free API Service with Galaxy AI:

- User @white_d3vil announced Galaxy AI, offering a free API service for premium AI models like GPT-4.

Release of Next.js 14+ Starter Template:

- User @anayatk released a Next.js 14+ starter template with modern development tools and shared the GitHub Template link.

Blog on Building Real-Time RAG with LangChain:

- User @hkdulay shared a post on constructing Real-Time Retrieval-Augmented Generation using LangChain to improve response accuracy.

Exploring Advanced Indexing in RAG Series:

- User @tailwind8960 discussed indexing intricacies in retrieval-augmented generation to enhance responses.
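A basic indexing step in RAG is splitting documents into overlapping chunks before embedding them, so that a fact near a chunk boundary still appears intact in at least one chunk. A minimal word-based sketch; the function name and default sizes are illustrative, not values from the post.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_words("a b c d e f g", chunk_size=3, overlap=1))
```

Many pipelines count tokens rather than words; LangChain's own text splitters, such as `RecursiveCharacterTextSplitter`, expose comparable `chunk_size` and `chunk_overlap` settings.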

Duplicate Message about Steam Gift:

- User @teitei40 posted twice about a $50 Steam gift redemption link without additional context.

LangChain AI Tutorials - Messages

- Let's Decode the Tokenizer: @lhc1921 shared a YouTube video about building the GPT tokenizer, an essential component of Large Language Models (LLMs).

- Questionable Steam Gift Link: A user posted a dubious link offering $50 for Steam, raising concerns about its legitimacy.

- Google Sharpens AI with Stack Overflow: An article announced Google's use of Stack Overflow's OverflowAPI to enhance Google Cloud.

- Sergey Brin Spotlights Google’s Gemini: A tweet featuring Sergey Brin discussing Google's AI advancements.

- Innovative AI Reflections in Photoshop: A user shared a GitHub repository for Stable Diffusion, allowing realistic reflections in images.

- Claude 3 Model Announcements Cause Stir: Discussions about Anthropic's Claude 3 model family and its impact on existing models.

- Concern Over India’s AI Deployment Regulation: Users debated India's requirement for government approval before deploying AI models.
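GPT-style tokenizers are trained with byte-pair encoding (BPE): start from raw UTF-8 bytes, repeatedly find the most frequent adjacent pair of token ids, and replace it with a new id. A minimal sketch of that training loop in plain Python follows. It omits the regex pre-splitting and special-token handling that production tokenizers add, and the function names are illustrative.

```python
from collections import Counter


def most_frequent_pair(ids: list[int]):
    """Most common adjacent pair, or None if fewer than two ids remain."""
    pairs = Counter(zip(ids, ids[1:]))
    return max(pairs, key=pairs.get) if pairs else None


def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every non-overlapping occurrence of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text: str, num_merges: int):
    ids = list(text.encode("utf-8"))  # start from raw bytes (ids 0..255)
    merges = {}
    for k in range(num_merges):
        pair = most_frequent_pair(ids)
        if pair is None:
            break
        new_id = 256 + k  # new token ids start after the 256 byte values
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


ids, merges = train_bpe("aaabdaaabac", 3)
print(ids, merges)
```

Encoding new text replays the learned merges in the order they were created, which is why the `merges` table is the core artifact a trained tokenizer ships.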

DiscoResearch

German Semantic Similarity Boosted:

- User @sten6633 improved semantic similarity calculations by fine-tuning gbert-large from deepset on German domain-specific texts, converting it into a sentence transformer, and then fine-tuning it further on Telekom's paraphrase dataset. Each step resulted in a significant improvement.
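Turning an encoder such as gbert-large into a sentence transformer means adding a pooling step that collapses per-token vectors into one sentence vector. The summary does not say which pooling @sten6633 used; mean pooling over non-padding tokens, a common choice, is assumed in this plain-Python sketch, and the function name is illustrative.

```python
def mean_pool(token_embeddings: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the token vectors whose mask entry is 1 (padding is ignored)."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, mask in zip(token_embeddings, attention_mask):
        if mask:
            count += 1
            for d in range(dim):
                total[d] += vec[d]
    if count == 0:
        raise ValueError("attention_mask has no active tokens")
    return [t / count for t in total]


# Two real tokens and one padding token; the padding vector is excluded.
print(mean_pool([[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]], [1, 1, 0]))
```

The resulting sentence vectors are what the later paraphrase fine-tuning step trains so that paraphrases land close together under cosine similarity.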

"AI in Production" Conference Call for Speakers:

- @dsquared70 invites developers integrating Generative AI into production to speak at a conference in Asheville, NC. Potential speakers can apply by April 30 for the event on July 18 & 19.

Claude-3's Performance in German Unclear:

- @bjoernp inquires about the performance of Anthropic's Claude-3 in German, sharing a link about it, while user @devnull0 mentions limited access and issues with German phone numbers.

Claude AI Access Issues in the EU:

- @bjoernp pointed out, with a link to the location restrictions, that Claude AI is not available in the EU, although @devnull0 mentioned using tardigrada.io to access it in December.

German Phone Number Success with Claude AI:

- Contradicting @devnull0's experience, user @sten6633 states that registering with a German mobile number was fine.

FAQ

Q: What are the different sizes of the Claude 3 model?

A: The Claude 3 model comes in three sizes: Haiku, Sonnet, and Opus, with Opus being the most powerful.

Q: What are some key features of the Claude 3 model?

A: The Claude 3 models are multimodal, have sophisticated vision capabilities, excel at tasks like turning a two-hour video into a blog post, offer long context with near-perfect recall, and are easier to use for complex instructions and structured outputs.

Q: In what domains has Claude 3 received positive evaluations?

A: Claude 3 has received positive evaluations in various domains like finance, medicine, and philosophy.

Q: How does Claude 3 compare to GPT-4 in performance?

A: Claude 3 outperforms GPT-4 on tasks such as coding a Discord bot and on the GPQA benchmark, impressing both benchmark authors and users.

Q: What challenges persist with Claude 3 despite advancements?

A: Despite its advances, Claude 3 seems to show little improvement on non-standard tasks such as handling PyTorch CUDA kernels.
